Performance Optimisation and Productivity

Comparison between the stand-alone version and DLB (MN5)

In this experiment we run the default configuration of the DLB-Pils kernel on the MareNostrum 5 GPP (General Purpose Partition). The system includes 6408 nodes, each configured with 2x Intel Sapphire Rapids 8480+ processors at 2 GHz with 56 cores each, and 256 GB of DDR5 main memory.

For the initial experiment we used the default configuration of the cluster, with the following modules already loaded:

1) intel/2023.2.0
2) impi/2021.10.0
3) mkl/2023.2.0
4) ucx/1.15.0
5) oneapi/2023.2.0
6) bsc/1.0

The Makefile uses mpicc=icc (Intel compiler 2023.2.0), and the MPI library is Intel MPI 2021.10.0.

Benchmark execution

Once the program is built, we run pils with the default parameters in the run.sh script (i.e., pils --loads 2000,10000 --grain 0.1 --iterations 5 --task-duration 500). It creates two MPI ranks executing 2,000 and 10,000 tasks respectively, each task lasting 500 µs, so in every iteration the ranks execute 2,000 x 500 = 1,000,000 µs (1 s) and 10,000 x 500 = 5,000,000 µs (5 s) of work respectively. The program iterates 5 times:

    LB: 0.60
    0: Load = 2000, BS = 200
    1: Load = 10000, BS = 1000
    0: Local time: 1.000
    1: Local time: 5.002
    0: Local time: 2.001
    1: Local time: 10.003
    0: Local time: 3.001
    1: Local time: 15.005
    0: Local time: 4.001
    1: Local time: 20.007
    0: Local time: 5.002
    1: Local time: 25.008
    Total CPU time: 30.010 s

Application time = 12.5 s
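The values in this output can be cross-checked against the command-line parameters. The Python sketch below is our own reconstruction, not part of pils: it assumes that BS is computed as Load x grain, that LB is the POP-style load balance (average load divided by maximum load), and that "Local time" is the cumulative useful CPU time of each rank.

```python
# Parameters from the run.sh invocation:
# pils --loads 2000,10000 --grain 0.1 --iterations 5 --task-duration 500
loads = [2000, 10000]      # tasks per rank
grain = 0.1
iterations = 5
task_duration_us = 500     # microseconds per task

# Assumption: block size = load * grain (matches "BS = 200" and "BS = 1000").
block_sizes = [round(load * grain) for load in loads]

# Assumption: LB = average load / maximum load (POP load balance efficiency).
lb = (sum(loads) / len(loads)) / max(loads)

# Useful CPU work per rank and iteration, in seconds.
work_per_iter_s = [load * task_duration_us / 1e6 for load in loads]

# Assumption: "Local time" is cumulative, i.e. k * work after iteration k.
cumulative_local = [
    [round(k * w, 3) for k in range(1, iterations + 1)] for w in work_per_iter_s
]

total_cpu_s = sum(w * iterations for w in work_per_iter_s)

print(f"LB: {lb:.2f}")
for rank, (load, bs) in enumerate(zip(loads, block_sizes)):
    print(f"{rank}: Load = {load}, BS = {bs}")
print("Cumulative local times:", cumulative_local)
print(f"Total CPU time: {total_cpu_s:.3f} s")
```

It reproduces LB 0.60, the block sizes and the cumulative local times of the run, and an ideal total CPU time of 30.000 s; the few extra milliseconds in the measured 30.010 s are presumably runtime and timing overhead.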

The second experiment uses the DLB version of the benchmark. In this case, the source code has been slightly modified to include the DLB header file and to call DLB_Borrow() just before each parallel region. To compile the program, the DLB_HOME environment variable must point to a valid installation of the DLB library. Before executing the program, the library must also be preloaded (in the benchmark, this step is also included in the run script).
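The effect of borrowing idle cores on the elapsed time can be illustrated with an idealized model. The Python sketch below is not DLB's algorithm; it assumes 2 threads per rank (4 cores in total, consistent with the 2 additional threads that appear in the DLB trace), perfectly divisible work, and instantaneous lending of the cores of a rank that has finished its iteration. Without lending, rank 1 needs 5 s / 2 threads = 2.5 s per iteration, which matches the 12.5 s application time measured above; with lending, rank 1 runs on 4 cores once rank 0 is idle, giving 1.5 s per iteration.

```python
# Idealized model, NOT DLB's actual algorithm: elapsed time per iteration
# for ranks that either keep their own cores or lend them once idle.
WORK_PER_ITER = [1.0, 5.0]   # useful CPU seconds per iteration (rank 0, rank 1)
THREADS_PER_RANK = 2         # assumption: 2 threads per rank, 4 cores in total
ITERATIONS = 5
EPS = 1e-12


def iteration_time_static(work, threads):
    """Each rank uses only its own threads, so the slowest rank sets the pace."""
    return max(w / threads for w in work)


def iteration_time_borrowing(work, threads):
    """Cores of finished ranks are shared evenly among ranks still running.

    Assumes work is perfectly divisible and lending is instantaneous.
    """
    total_cores = threads * len(work)
    remaining = list(work)
    cores = [float(threads)] * len(work)
    elapsed = 0.0
    running = [i for i, r in enumerate(remaining) if r > EPS]
    while running:
        # Advance time until the next running rank finishes its work.
        step = min(remaining[i] / cores[i] for i in running)
        elapsed += step
        for i in running:
            remaining[i] -= step * cores[i]
        running = [i for i in running if remaining[i] > EPS]
        # Idle cores are lent to the ranks that still have work.
        for i in running:
            cores[i] = total_cores / len(running)
    return elapsed


static_total = ITERATIONS * iteration_time_static(WORK_PER_ITER, THREADS_PER_RANK)
borrow_total = ITERATIONS * iteration_time_borrowing(WORK_PER_ITER, THREADS_PER_RANK)
print(f"Without lending: {static_total:.1f} s")
print(f"With lending:    {borrow_total:.1f} s")
```

Because DLB_Borrow() is called only before each parallel region, a real run can presumably pick up lent cores only at the next region boundary; the model ignores that delay, so it gives a lower bound for the DLB elapsed time.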

Executing the program with exactly the same parameters as in the first experiment produces the following output:

    LB: 0.60
    0: Load = 2000, BS = 200
    1: Load = 10000, BS = 1000
    0: Local time: 1.000
    1: Local time: 5.002
    0: Local time: 2.001
    1: Local time: 10.004
    0: Local time: 3.001
    1: Local time: 15.006
    0: Local time: 4.002
    1: Local time: 20.008
    0: Local time: 5.002
    1: Local time: 25.009
    Total CPU time: 30.012 s

Application time = 7.5 s

The local time observed per iteration is almost the same in both experiments, with a total CPU time of about 30.01 seconds, while the elapsed (application) time is reduced from 12.5 seconds to 7.5 seconds.
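The improvement can also be expressed as parallel efficiency, i.e., useful CPU time divided by the core-time allocated to the run. The Python sketch below uses the measured values from both runs and assumes 4 cores in total (2 ranks with 2 threads each).

```python
# Measured values reported by the two runs.
cpu_time = {"stand-alone": 30.010, "DLB": 30.012}  # "Total CPU time" in s
elapsed = {"stand-alone": 12.5, "DLB": 7.5}        # "Application time" in s
CORES = 4  # assumption: 2 MPI ranks x 2 threads each

# Parallel efficiency: useful CPU time over the allocated core-time.
efficiency = {run: cpu_time[run] / (CORES * elapsed[run]) for run in cpu_time}
speedup = elapsed["stand-alone"] / elapsed["DLB"]

for run, eff in efficiency.items():
    print(f"{run:<12} efficiency = {eff:.2f}")
print(f"speedup = {speedup:.2f}")
```

The stand-alone run yields about 0.60, the same value as the reported LB, since the cores of rank 0 are idle for most of each iteration. The DLB run yields about 1.00 (marginally above 1, presumably because the application time is reported with 0.1 s resolution), and the speedup is about 1.67.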

Instrumentation

Getting Paraver traces with DLB-Pils is also easy. You need to enable the Extrae library (i.e., have a valid installation of this library with the EXTRAE_HOME environment variable pointing to it). Then, you need to preload the corresponding version of this library before executing the program. The run.sh script already takes care of this process when the TRACE variable is set to 1 in the header of the script.
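The preload step can be sketched as follows. This is a hypothetical illustration, not the contents of run.sh: the helper build_ld_preload and the library file names (lib/libdlb_mpi.so under DLB_HOME and lib/libompitrace.so, assumed here to be the MPI+OpenMP variant of Extrae, under EXTRAE_HOME) are assumptions to be checked against the actual script and installations, as are the required library order and any instrumented DLB variant.

```python
import posixpath

# Hypothetical library locations; check run.sh and your installations.
DLB_PRELOAD_LIB = "lib/libdlb_mpi.so"
EXTRAE_PRELOAD_LIB = "lib/libompitrace.so"


def build_ld_preload(env, use_dlb, trace):
    """Return an LD_PRELOAD value for one run (hypothetical helper)."""
    libs = []
    if trace:
        # Tracing needs EXTRAE_HOME pointing to a valid Extrae installation.
        libs.append(posixpath.join(env["EXTRAE_HOME"], EXTRAE_PRELOAD_LIB))
    if use_dlb:
        # The DLB version needs DLB_HOME pointing to a valid DLB installation.
        libs.append(posixpath.join(env["DLB_HOME"], DLB_PRELOAD_LIB))
    # LD_PRELOAD accepts a colon-separated list of shared libraries.
    return ":".join(libs)


env = {"DLB_HOME": "/opt/dlb", "EXTRAE_HOME": "/opt/extrae"}  # example paths
print(build_ld_preload(env, use_dlb=True, trace=True))
```

The resulting string would be exported as LD_PRELOAD in the environment of the MPI launcher, mirroring what run.sh does when TRACE is set to 1.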

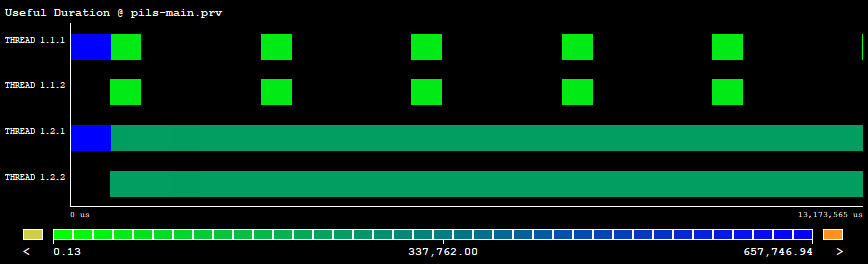

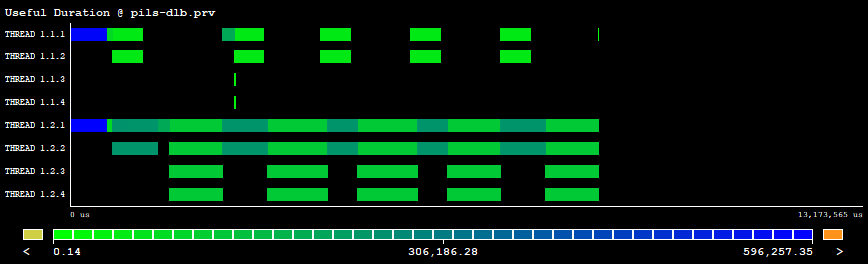

Figure 1 is the result of executing DLB-Pils without DLB, while Figure 2 is the result of enabling this component at runtime.

Figure 1: Useful duration executing DLB-Pils without DLB.

Figure 1 shows how the two ranks have different loads in each of the 5 executed iterations. Threads in rank 0 execute their part of the job and then stay idle for the rest of the iteration (the intermediate black regions). On the other hand, threads in rank 1 are busy during the whole execution.

Figure 2: Useful duration executing DLB-Pils with DLB enabled.

Figure 2 shows how DLB leverages the idle periods of the first rank to enable the 2 additional threads that now appear in each rank.