Performance Optimisation and Productivity

Chunk/task grain-size trade-off (parallelism/overhead)

Pattern addressed: Very fine-grained tasks/chunksThe essential purpose of parallel programming is to reduce the runtime by dividing time-demanding computations among available resources. The granularity is an important aspect that is often overlooked. The amount of work assigned to a computational core/thread has to have a reasonable size so that the overhead caused by thread/core control does not degrade the performance. When the tasks or chunks are too small, this overhead can cost the same or even more time than the computation itself. In such a case, running a program in parallel may not bring any benefit or even can consume more time and resources than running as a single thread application. (more...)

Required condition: A large number of iterations - number of iteration is significantly larger (many times) than the number of threads.

A trade-off between parallelism and overhead in terms of the POP metrics corresponds to balancing the Load Balance and Communication efficiency. In general, we aim to create enough chunks (parallelism) in order to utilize all available computational resources (threads). At the same time, we need to keep the related overhead low. Less overhead is reflected by higher Communication efficiency. On the other hand, scheduling of threads at the runtime is often needed in order to balance the workload effectively. This leads to better Load Balance at the cost of the lower Communication Efficiency.

It is important to ensure large enough chunks/tasks in order to decrease the effect of the overhead. A reasonable amount of the overhead should be less than 10% of the total runtime. Less is better. Having the overhead under 5% of the total runtime is more favorable. For example: if a computational chunk/task with the duration of 50 us is followed by a runtime scheduling that takes around 1-3 us, then the overhead is of 2-6 % only.

We may control the granularity directly by specifying the size of chunks/tasks or secondarily by specifying the total number of chunks/tasks. The size of individual chunks for the OpenMP loop construct is set using chunk_size within the schedule clause and for taskloop construct using the clause grainsize. For the taskloop construct, one can directly specify the total number of tasks using the num_tasks clause. While for the loop construct, to specify the total number of chunks, one has to compute the appropriate chunk_size number based on chunk_size = (nIters / nChunks) + 1. These two variants are described in more detail below.

Based on iteration duration (number of iterations)

The number of iterations within a chunk/task should be specified based on the duration of individual iterations such that the duration of the chunk/task is at least 50 us, which ensures a reasonable overhead. This approach requires a knowledge of the iteration duration, which probably has to be obtained from a measurement provided by the application itself or using some external performance tool. Then the chunk_size is computed as chunk_size = 50 / t_it, where t_it is the duration of an iteration (average value) and 50 corresponds to the required duration of a chunk.

Changing the size of chunks/tasks may affect also other performance aspects, for example, the IPC. Therefore, it is better to repeat the measurement after setting the chunk_size and recompute the value again. This procedure may be repeated a few times.

Setting the chunk_size

- measure avg chunk time

t_chunkand compute avg time per one iteration,t_it = t_chunk/chunk_size - set the

chunk_sizeaccordingly:chunk_size = 50 / t_it - repeat steps 1. and 2. several times till the measured

t_chunkis really close to 50 us.

The actual value for an optimal chunk duration depends on the hardware. Iteration runtimes distribution is a key factor that may make finding an optimal chunk_size difficult. Especially in case of large differences or a special distribution with increasing/decreasing iteration runtimes.

Based on the number of chunks

Another approach is to focus on the total number of chunks/tasks. Then the number of iterations within a chunk/task is computed in order to obtain a certain number of chunks/tasks: chunk_size = (nIters / nChunks) + 1. As we aim to distribute the workload equally among threads, it is desirable for the number of chunks to be divisible by the number of threads. Thus a fixed number of chunks is assigned to each tread (nChunksPerThread). The total number of chunks is then expressed as nChunks = nChunksPerThread * nThreads and the number of iterations within a chunk/task may be obtained as chunk_size = (nIters / (nChunksPerThread * nThreads)) + 1.

This approach is suitable for loops with identical or well-balanced iterations.

Assuming an equal workload distribution across iterations and an ideal machine without noise, this approach leads to a good Load Balance since all threads process the same number of chunks and therefore the same number of tasks/iterations. On real machines, the Load Balance is more sensitive to noise. Based on the granularity, we may choose the number of chunks per thread (nChunksPerThread) such that the duration of a chunk is longer than 50 us. Using this approach we typically assign a lower number of chunks of larger sizes to individual threads and therefore the overhead is low.

Setting the chunk_size

- decide how many chunks per thread you need/want and compute the respective chunk-size,

chunk_size = (nIters / (nChunksPerThread * nThreads)) + 1 - inspect the parallel efficiency:

- if the overhead is good but the load balance is bad, then increase the number of chunks

nChunksPerThreadand recompute thechunk_size - if the overhead is noticeable, then decrease the number of chunks

nChunksPerThreadand recompute thechunk_size

- if the overhead is good but the load balance is bad, then increase the number of chunks

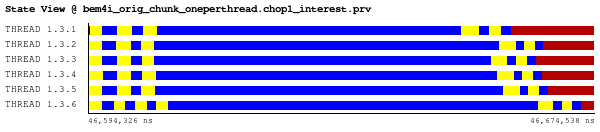

Example 1: one chunk per thread

Applying the formula above, the chunk_size was set to 4654 iterations. Then the total runtime of the loop is 80 us. Respective State view is presented in the figure below. Taking only one chunk per thread is not optimal regarding the Load balance - we can see the value gets lower to 92%, but the total runtime of the loop is significantly shorter.

The duration of the long chunks is around 54 us. the average Scheduling and Fork/Join state duration is 1.9 us. Therefore, the overhead takes around 4% of the total runtime. Additional parallel efficiency degradation is caused by the synchronization (implicit barrier) at the end of the loop. Due to the short duration of computations in the loop, this synchronization is noticeable. IPC value is equal to 1.58.

| Parallel eff. | 0.77 |

|---|---|

| - Load Balance | 0.92 |

| - Communication eff. | 0.84 |

| Other statistics | |

|---|---|

| Runtime | 80 us |

| IPC | 1.58 |

| # Instructions | 16799655 |

| Frequency | 2.86 GHz |

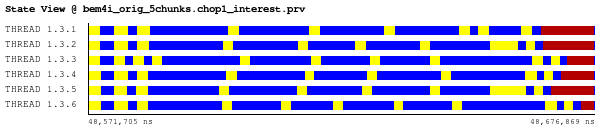

Example 2: five chunks per thread

For the next example, we assign five chunks to each thread, therefore the chunk_size is set to 931 iterations. The total runtime of the loop is 105 us. Respective State view is presented in the figure below. The Load Balance is 94%, while the Communication efficiency is 78% (with the synchronization state included).

The average chunk duration is 12 us, the average Scheduling and Fork/Join state duration is 1.9 us. Therefore, the overhead takes around 15% of the total runtime. Additional degradation of the parallel efficiency is caused by the synchronization (implicit barrier) at the end of the loop.

| Parallel eff. | 0.73 |

|---|---|

| - Load Balance | 0.94 |

| - Communication eff. | 0.78 |

| Other statistics | |

|---|---|

| Runtime | 105 us |

| IPC | 1.28 |

| # Instructions | 1716622 |

| Frequency | 2.92 GHz |

Recommended in program(s): BEM4I miniApp ·

Implemented in program(s): BEM4I miniApp (Chunksize 500) · BEM4I miniApp (One chunk per thread) ·