Performance Optimisation and Productivity

Collapse consecutive parallel regions

Pattern addressed: Sequence of fine grain parallel loopsThis pattern applies to parallel programming models based on a shared memory environment and the fork-join execution model (e.g., OpenMP). The execution of this kind of applications is initialy sequential (i.e., only one thread starts the execution of the whole program), and just when arriving at the region of the code containing potential parallelism, a new parallel region is created and multiple threads will start the execution of the associated code. Parallel execution usually will distribute the code among participating threads by means of work-sharing directives (e.g., a loop directive will distribute the loop iteration space among all threads participating in that region). (more...)

Required condition: When no upper-level parallelizable loop exists

The main idea behind collapsing parallel regions is to reduce the overhead of the fork-join phases. This technique consists on substituting a sequence of parallel work-sharing regions with a single parallel region and multiple inner work-sharing constructs.

The resulting code will be:

void code_A (double *A, int size, int n)

{

#pragma omp parallel

{

for (int it=0; i<iters; iters++) {

#pragma omp for [schedule(static) nowait]

for (int i=0; i<size; i++) {

A[i] += f();

}

}

}

}

void code_B (double *A, int size)

{

#pragma omp parallel

{

#pragma omp for [schedule(static) nowait]

for (int i=0; i<size; i++) {

A[i] += f();

}

#pragma omp single [nowait]

S();

#pragma omp for [schedule(static) nowait]

for (int i=0; i<size; i++) {

A[i] += g();

}

#pragma omp single [nowait]

S();

#pragma omp for [schedule(static) nowait]

for (int i=0; i<size; i++) {

A[i] += h();

}

}

}

In the code_A function, the transformation consists on placing the parallel region enclosing the outermost loop; while in code_B function, the transformation consists on creating a parallel region enclosing the three work-sharing loops. In addition, this best-practice also allows the possibility of removing work-sharing barriers (see the code in square brackets), which are mandatory in the parallel region. The schedule clause with the static parameter guarantees that all the threads will work on the same sub-iteration space, and the ‘nowait’ clause avoids the implicit barrier at the end of the construct.

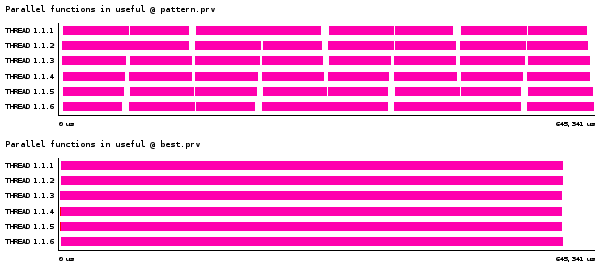

Following traces compare the execution of multiple parallel regions with worksharing constructs (top) and a single parallel region with internal work-sharing constructs (bottom). Both traces are shown using the same time duration.

The best-practice reduces the overhead of creating multiple parallel region and it also compensate the micro-imbalance that may occur within each parallelized loop.

Recommended in program(s): JuKKR kloop (original) ·