Performance Optimisation and Productivity

Avoidable transfers between host and GPU for MPI communication (GPU-Unaware MPI)

Usual symptom(s):

- Device Communication Efficiency: The Device Communication Efficiency (DevCommE) is defined as the ratio between the maximum useful computation time across all accelerator devices and the maximum (also across all accelerator devices) combined useful computation time and time waiting for data.

- Device Offload Efficiency: The Device Offload Efficiency (DOE) is defined as the ratio between the total useful computation time on the used CPUs and the sum of that time and the total host idle time (summed over all CPUs) that is related to managing the targeted accelerator devices.
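The two definitions can be written compactly as formulas. The notation below is an assumption introduced here for illustration, not taken from the metric definitions: $D$ is the set of accelerator devices, $C$ the set of used CPUs, $T^{\mathrm{useful}}$ the useful computation time, $T^{\mathrm{wait}}_{d}$ the time device $d$ spends waiting for data, and $T^{\mathrm{idle}}_{c}$ the idle time of CPU $c$ that is related to managing the devices.

```latex
\begin{align}
\mathrm{DevCommE} &=
  \frac{\max_{d \in D} T^{\mathrm{useful}}_{d}}
       {\max_{d \in D} \left( T^{\mathrm{useful}}_{d} + T^{\mathrm{wait}}_{d} \right)} \\[1ex]
\mathrm{DOE} &=
  \frac{\sum_{c \in C} T^{\mathrm{useful}}_{c}}
       {\sum_{c \in C} T^{\mathrm{useful}}_{c} + \sum_{c \in C} T^{\mathrm{idle}}_{c}}
\end{align}
```

Under this reading both values lie between 0 and 1, and any time a device spends waiting for data that is staged through the host increases the denominator of DevCommE and therefore lowers it.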

When GPUs perform the computation in a multi-node MPI program, data exchange between GPUs on different nodes must be orchestrated by the host whenever the MPI library in use is not GPU-aware, because such a library only accepts buffers located in host memory. Staging the data through the host leads to transfers between host and GPU that can be reduced or avoided entirely by using the GPU-aware features provided by common MPI libraries.

Consider the following snippet, where data located on the host is distributed via MPI (here using MPI_Bcast) only to be copied to the GPU on arrival for further processing. Afterwards, the processed data is copied back to the host so that the results can be collected via MPI (here using MPI_Gather). If the computation is repeated several times with updated data that is exchanged between computations, the data must also be transferred back and forth between host and GPU in every iteration, although the host is not actually involved in processing it.

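    cudaMalloc(&device_buf, size);

    for (int i = 0; i < NUM_ITERATIONS; i++) {
        // Distribute the input via MPI into host memory
        MPI_Bcast(host_buf, ...);

        // Stage the received data onto the GPU
        cudaMemcpy(device_buf, host_buf, size, cudaMemcpyHostToDevice);

        // Process the data on the GPU
        kernel<<<...>>>(device_buf);

        // Stage the result back to the host only so that MPI can send it
        cudaMemcpy(host_buf, device_buf, size, cudaMemcpyDeviceToHost);

        // Collect the results via MPI from host memory
        MPI_Gather(host_buf, ...);
    }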

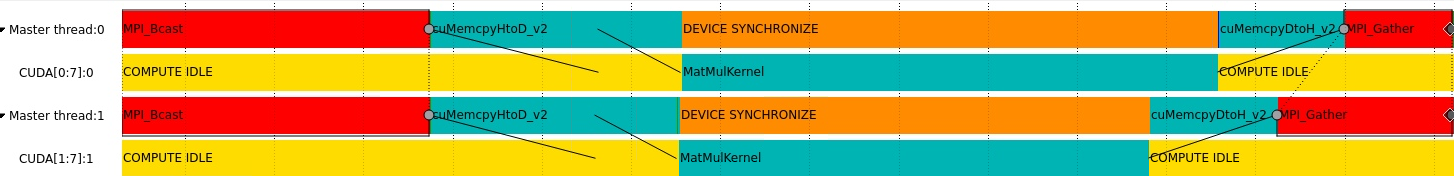

The trace above shows the sequence of operations for a single iteration for two MPI processes, each managing one GPU. A significant proportion of the runtime is spent in host-to-device and device-to-host memory transfers. The arrows indicate the dependencies between the kernel execution and the memory transfers.
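For contrast, the following is a minimal sketch of how the same loop might look when device buffers are passed directly to MPI. It only works under the assumption that the MPI library was built with CUDA support (GPU-aware MPI); with a GPU-unaware library, passing device pointers to MPI calls is invalid. The kernel `scale`, the constants `N` and `NUM_ITERATIONS`, and the buffer `device_result` are hypothetical placeholders chosen for illustration, and device selection for nodes with several GPUs is omitted.

```cuda
// Sketch only: assumes a CUDA-aware (GPU-aware) MPI build, so that
// device pointers may be passed directly to MPI calls.
#include <mpi.h>
#include <cuda_runtime.h>
#include <cstdio>

#define N 1024             // hypothetical number of elements per rank
#define NUM_ITERATIONS 10  // hypothetical number of iterations

// Hypothetical kernel standing in for the real computation.
__global__ void scale(double *buf, int n, double factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) buf[i] *= factor;
}

int main(int argc, char **argv) {
    MPI_Init(&argc, &argv);
    int rank = 0, nranks = 1;
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &nranks);

    // All communication buffers live in device memory.
    double *device_buf = NULL;
    double *device_result = NULL;  // receive buffer, only used on the root
    cudaMalloc(&device_buf, N * sizeof(double));
    cudaMemset(device_buf, 0, N * sizeof(double));
    if (rank == 0) {
        cudaMalloc(&device_result, (size_t)nranks * N * sizeof(double));
    }

    for (int i = 0; i < NUM_ITERATIONS; i++) {
        // Broadcast directly into device memory: no host staging copy.
        MPI_Bcast(device_buf, N, MPI_DOUBLE, 0, MPI_COMM_WORLD);

        scale<<<(N + 255) / 256, 256>>>(device_buf, N, 2.0);
        // The kernel must have finished before MPI reads the buffer.
        cudaDeviceSynchronize();

        // Gather directly from device memory into device memory on the root.
        MPI_Gather(device_buf, N, MPI_DOUBLE,
                   device_result, N, MPI_DOUBLE, 0, MPI_COMM_WORLD);
    }

    if (rank == 0) {
        printf("completed %d iterations on %d ranks\n", NUM_ITERATIONS, nranks);
        cudaFree(device_result);
    }
    cudaFree(device_buf);
    MPI_Finalize();
    return 0;
}
```

In this variant the explicit `cudaMemcpy` calls disappear from the loop. Whether the data then moves directly between GPUs or is still staged internally depends on the MPI library and the interconnect, so the effect should be confirmed with a new trace.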